12 08 2015

Figure out how recover from split brain

Recently my whole rabbitmq cluster 3.5 crashed.

Generally just restart the last node up before the crash is enough. But sometimes not.

I would point out that I have learned in some worst case scenario with rabbitmq. I’m not going to talk about a simple node crash, rabbitmq handle this pretty well.

My example will describe disaster recovery. A cluster with HA durable queues and persistent messages. All nodes in the cluster where powered off in the “same time”. Now we have a problem.

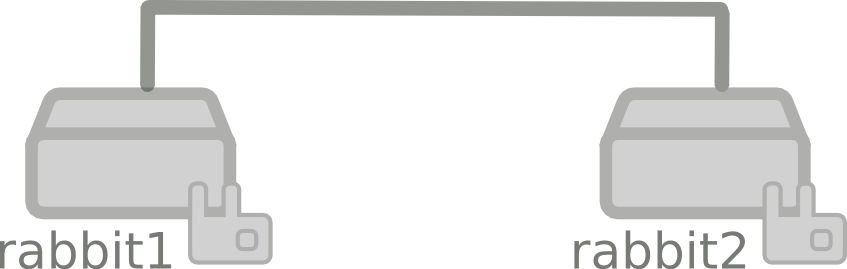

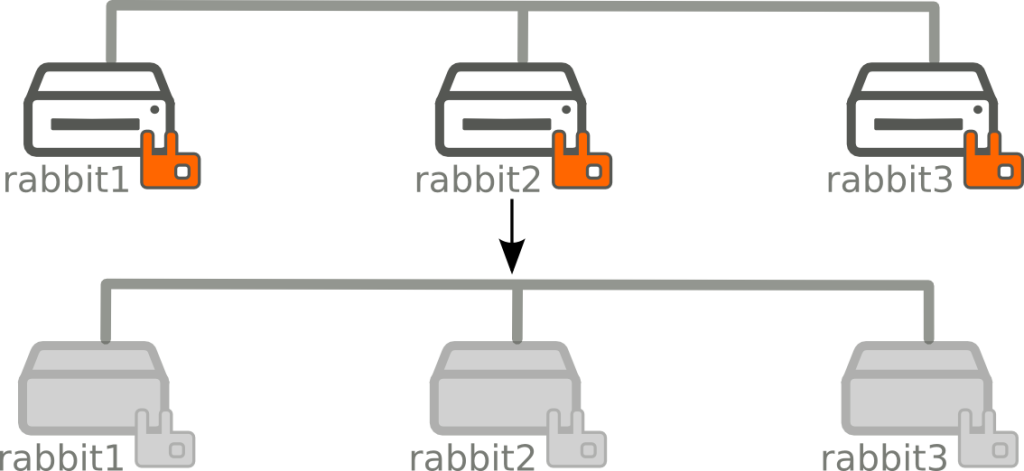

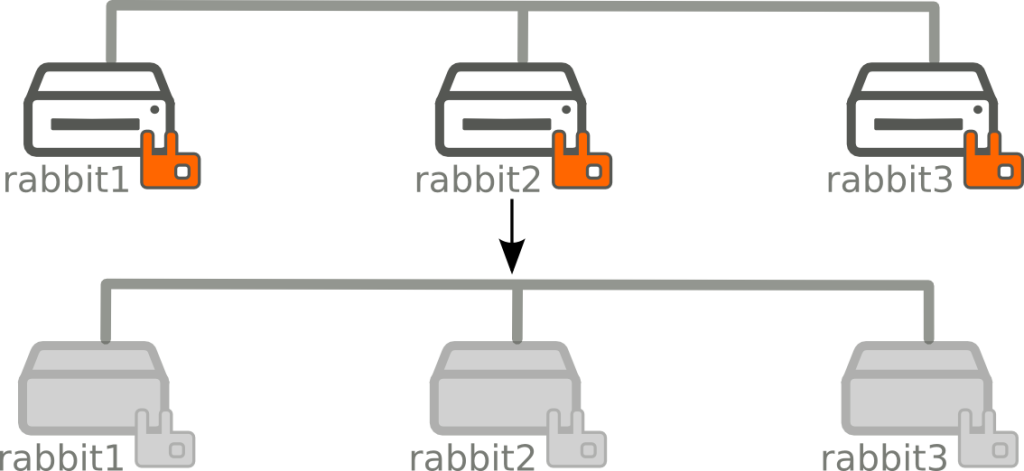

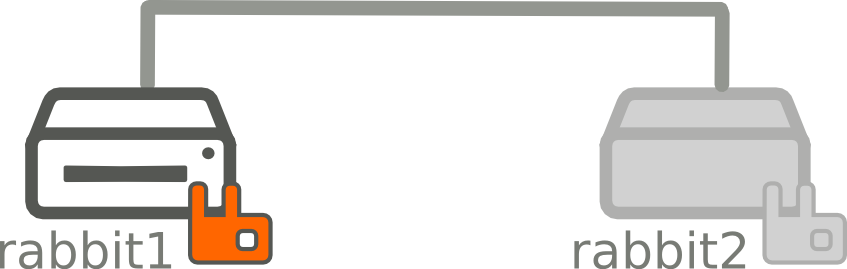

Cluster state before poweroff :

+--------------+----------+-----------+-------------------------------+----------------+---------+-------------------+

| name | messages | policy | synchronised_slave_nodes | node | durable | arguments.ha-mode |

+--------------+----------+-----------+-------------------------------+----------------+---------+-------------------+

| ha.queue_ha1 | 1 | ha-queues | rabbit@rabbit3 rabbit@rabbit2 | rabbit@rabbit1 | True | all |

| ha.queue_ha2 | 1 | ha-queues | rabbit@rabbit3 rabbit@rabbit1 | rabbit@rabbit2 | True | all |

| ha.queue_ha3 | 1 | ha-queues | rabbit@rabbit2 rabbit@rabbit1 | rabbit@rabbit3 | True | all |

Now I’m here… As a devops I need to do all I can to start this cluster again with all data.

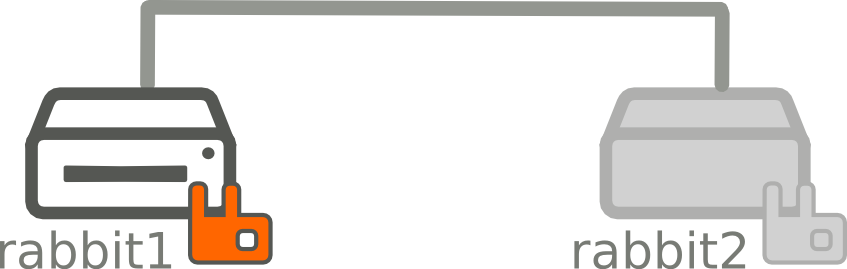

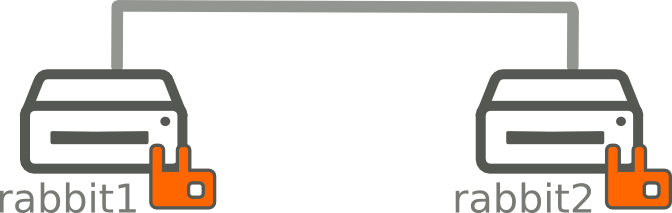

Case 1 : Cluster totally down. Nodes won’t start.

This case it’s pretty easy, start all nodes. (preferably the last node stopped in first. Sometime no rabbit node start.

Follow the official documentation (https://www.rabbitmq.com/man/rabbitmqctl.1.man.html#force_boot), simply force one node to start. And then start the others nodes.

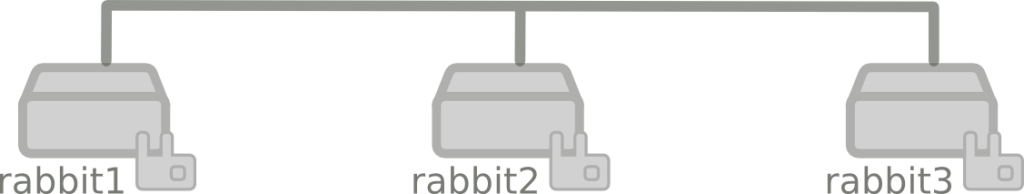

The actual state of this cluster is :

rabbit1:/# rabbitmqctl force_boot

If this solution don’t work on your rabbitmq version, try solutions at the end of this article in astuce section.

+--------------+----------+-----------+--------------------------+----------------+---------+-------------------+

| name | messages | policy | synchronised_slave_nodes | node | durable | arguments.ha-mode |

+--------------+----------+-----------+--------------------------+----------------+---------+-------------------+

| ha.queue_ha1 | 1 | ha-queues | | rabbit@rabbit1 | True | all |

| ha.queue_ha2 | | | | rabbit@rabbit2 | True | all |

| ha.queue_ha3 | | | | rabbit@rabbit3 | True | all |

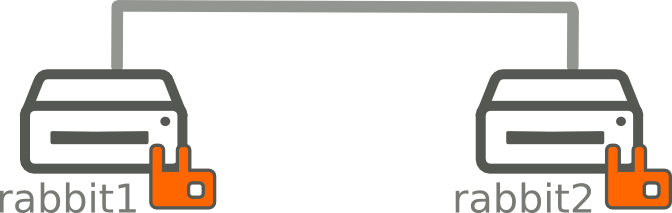

rabbit2 and rabbit3 should start normally :

rabbit2:/# /etc/init.d/rabbitmq-server start

rabbit3:/# /etc/init.d/rabbitmq-server start

+--------------+----------+-----------+-------------------------------+----------------+---------+-------------------+

| name | messages | policy | synchronised_slave_nodes | node | durable | arguments.ha-mode |

+--------------+----------+-----------+-------------------------------+----------------+---------+-------------------+

| ha.queue_ha1 | 1 | ha-queues | rabbit@rabbit3 rabbit@rabbit2 | rabbit@rabbit1 | True | all |

| ha.queue_ha2 | 1 | ha-queues | rabbit@rabbit3 rabbit@rabbit1 | rabbit@rabbit2 | True | all |

| ha.queue_ha3 | 1 | ha-queues | rabbit@rabbit2 rabbit@rabbit1 | rabbit@rabbit3 | True | all |

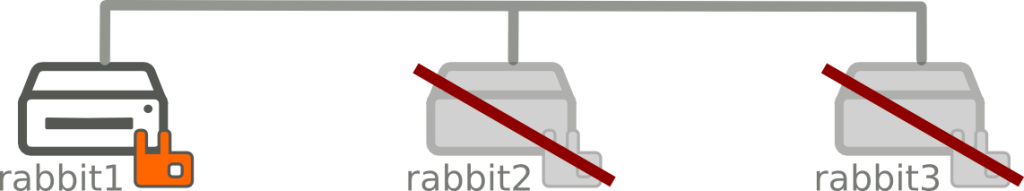

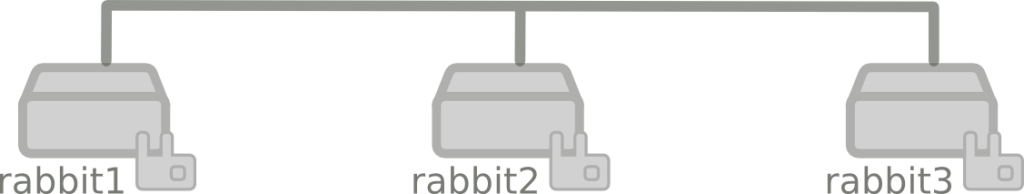

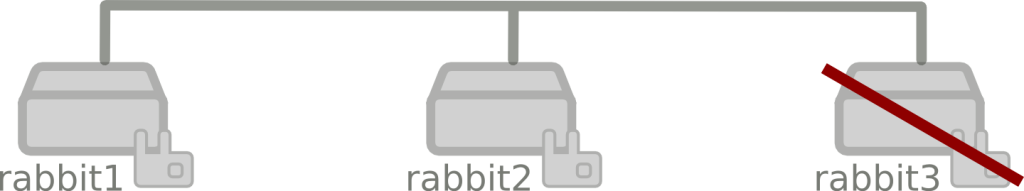

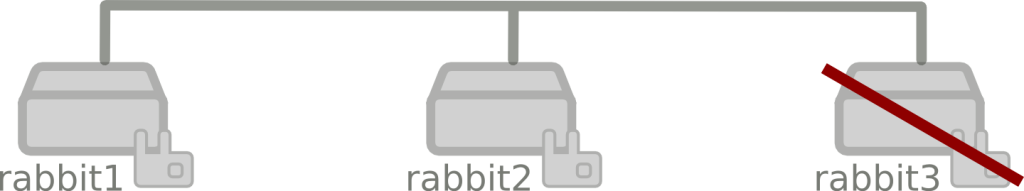

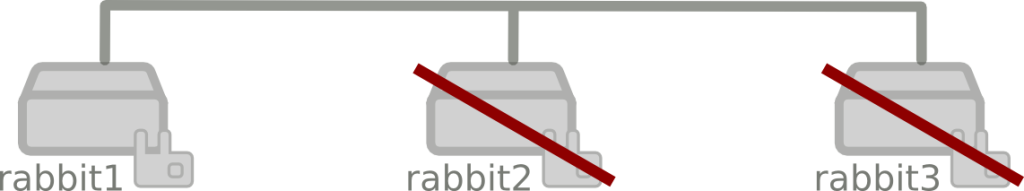

Case 2 : rabbit3 physically dead

My cluster is totally down and rabbit3 node is physically dead. The plan is to start the cluster without rabbit3 and promote a new master of queues where rabbit3 was the master before being powered off.

Before fix the cluster you should understand 2 important things (https://www.rabbitmq.com/ha.html#promotion-while-down) :

- Rabbitmq can only promote stopped slaves during forget_cluster_node to be the next master of the queues on forgotten node.

- When you force a node to boot, at startup rabbitmq automatically flush data of all queues where this node was not master.

It’s important to understand that RabbitMQ can only promote stopped slaves during forget_cluster_node, since any slaves that are started again will clear out their contents as described at “stopping nodes and synchronisation” above. Therefore when removing a lost master in a stopped cluster, you must invoke rabbitmqctl forget_cluster_node before starting slaves again

For these reasons, take care to appropriately remove dead node as describe below before start all nodes of your cluster.

For version 3.3, I use a dirty hack to keep queue of rabbit3 (See below). Note : I tried to force boot rabbit1 and forgot rabbit3. But when rabbit2 started, rabbit2 was not be promoted to be the new master of rabbit3 queues like it was in the new version of rabbitmq.

First step

Remove dead node from the cluster

Connect on rabbit1 and remove rabbit3 from the cluster :

# If rabbit1 is stopped

rabbit1:/# rabbitmqctl forget_cluster_node --offline rabbit@rabbit3

#If rabbit1 is started

rabbit1:/# rabbitmqctl forget_cluster_node rabbit@rabbit3

Note : No need to execute force_boot command on new rabbitmq version. Force boot is included when you launch forget_cluster_node.

You can now start rabbit1

rabbit1:/# /etc/init.d/rabbitmq-server start

+--------------+----------+-----------+--------------------------+----------------+---------+-------------------+

| name | messages | policy | synchronised_slave_nodes | node | durable | arguments.ha-mode |

+--------------+----------+-----------+--------------------------+----------------+---------+-------------------+

| ha.queue_ha1 | 1 | ha-queues | | rabbit@rabbit1 | True | all |

| ha.queue_ha2 | | | | rabbit@rabbit2 | True | all |

| ha.queue_ha3 | | | | rabbit@rabbit2 | True | all |

Dirty hack for version 3.3

rabbit1:/# touch /var/lib/rabbitmq/mnesia/rabbit@rabbit1/force_load

# Dirty hack

rabbit1:/# sed -i 's/rabbit3/rabbit1/g' /var/lib/rabbitmq/mnesia/rabbit@rabbit1/LATEST.LOG

rabbit1:/# /etc/init.d/rabbitmq-server start

rabbit1:/# rabbitmqctl forget_cluster_node rabbit@rabbit3

Second step

start other nodes of the cluster

rabbit2:/# /etc/init.d/rabbitmq-server start

+--------------+----------+-----------+--------------------------+----------------+---------+-------------------+

| name | messages | policy | synchronised_slave_nodes | node | durable | arguments.ha-mode |

+--------------+----------+-----------+--------------------------+----------------+---------+-------------------+

| ha.queue_ha1 | 1 | ha-queues | rabbit@rabbit2 | rabbit@rabbit1 | True | all |

| ha.queue_ha2 | 1 | ha-queues | rabbit@rabbit1 | rabbit@rabbit2 | True | all |

| ha.queue_ha3 | 1 | ha-queues | rabbit@rabbit1 | rabbit@rabbit2 | True | all |

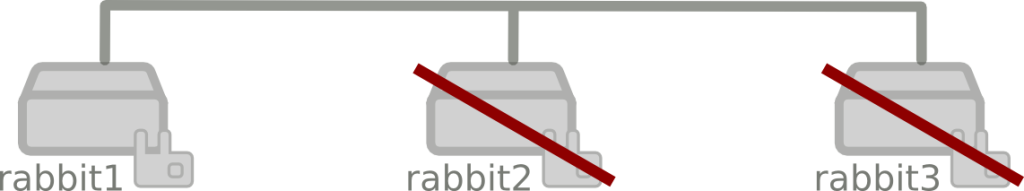

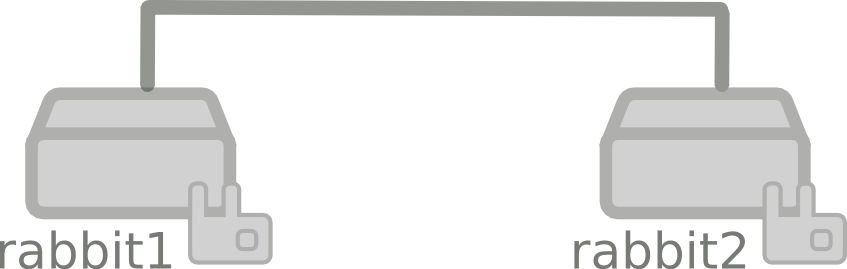

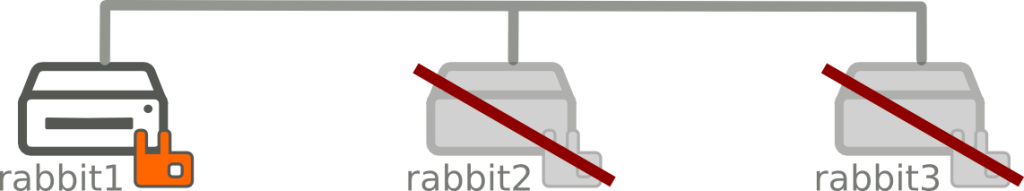

Case 3 : Two node physically dead. Only one node remaining.

In case you need to start only one node from a cluster.

I think you should not use this dirty hack, but it’s good to know that is possible.

If you simply try to force boot rabbit1, rabbit1 will flush all queues of rabbit2 and 3.

The idea is to tell to make believe to rabbit1 that it is the master of rabbit2 and 3 queues.

Firstly : Make believe rabbit1 that it is the master. And force it to start

rabbit1:/# sed -i 's/rabbit2/rabbit1/g;s/rabbit3/rabbit1/g' /var/lib/rabbitmq/mnesia/rabbit@rabbit1/LATEST.LOG

rabbit1:/# rabbitmqctl force_boot

# Or this this for old rabbitmq version

rabbit1:/# touch /var/lib/rabbitmq/mnesia/rabbit@rabbit1/force_load

rabbit1:/# /etc/init.d/rabbitmq-server start

Secondly : Remove old nodes from the cluster

rabbitmqctl forget_cluster_node rabbit@rabbit2

rabbitmqctl forget_cluster_node rabbit@rabbit3

+--------------+----------+-----------+--------------------------+----------------+---------+-------------------+

| name | messages | policy | synchronised_slave_nodes | node | durable | arguments.ha-mode |

+--------------+----------+-----------+--------------------------+----------------+---------+-------------------+

| ha.queue_ha1 | 1 | ha-queues | | rabbit@rabbit1 | True | all |

| ha.queue_ha2 | 1 | ha-queues | | rabbit@rabbit1 | True | all |

| ha.queue_ha3 | 1 | ha-queues | | rabbit@rabbit1 | True | all |

Magic! you have all your messages !

Astuce

Alternative way to force a rabbitmq cluster to start :

echo "RABBITMQ_NODE_ONLY=true" > /etc/rabbitmq/rabbitmq-env.conf

/etc/init.d/rabbitmq-server start &

# Do your things needed like forget node

rabbitmqctl forget_cluster_node --offline rabbit@rabbit3

rabbitmqctl stop

rm /etc/rabbitmq/rabbitmq-env.conf

/etc/init.d/rabbitmq-server start

Way 2 :

rabbit1:/# touch /var/lib/rabbitmq/mnesia/rabbit@rabbit1/force_load

rabbit1:/# /etc/init.d/rabbitmq-server start

debug, devops, openstack, rabbitmq, troubleshooting