8 08 2016

Continuous integration for Ansible

We know a lot of people who write or use Ansible roles and playbooks, but only a few of them think about implementing tests.

Typically, playbooks or roles are written in a VM and tested each time on the same VM until the playbook says “OK” everywhere. In the best case, the VM is sometimes flushed to validate the whole playbook.

When you use a role, you might be afraid to update or modify it because it is used in a lot of apps you don't want to break.

This is a problem: it's difficult to contribute to or use an untested role or playbook. To validate your changes, you have to spawn a VM, run Ansible and validate your change manually. This is bad: working that way, you might introduce a regression or break something else.

This is why I'm going to introduce a way to maintain Ansible roles and playbooks with continuous integration. In other words, how to test each Ansible change before using it in a production environment.

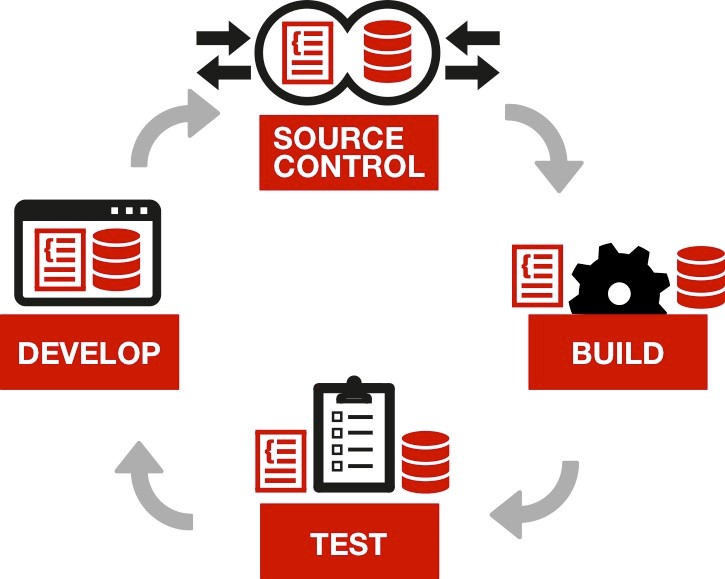

Now let's apply continuous integration to Ansible. We will discuss these main steps:

- Write code

- Validate code

- Tests

- Run tests

Write code

I'm not going to explain how to write your code, but I will point out a way to make contributors' lives easier and keep a consistent code style.

The solution is very simple: provide a style guide or a best practices guide. A good example:

- https://github.com/openshift/openshift-ansible/blob/master/docs/style_guide.adoc

- https://github.com/openshift/openshift-ansible/blob/master/docs/best_practices_guide.adoc

Helping people know the code style of the project will save you time during pull request reviews.

Validate code

Running unit and functional tests might require some work from your CI: create a new container, install dependencies and launch all your stuff. This can waste time and resources.

To avoid losing time, you should first ensure the code is well formatted and has no syntax errors. There are tools to do that for a lot of languages, for example Pylint for Python or puppet-lint for Puppet.

For Ansible we also have ansible-lint (https://github.com/willthames/ansible-lint). The ansible-lint check is not very powerful for now, but it can help save some time.

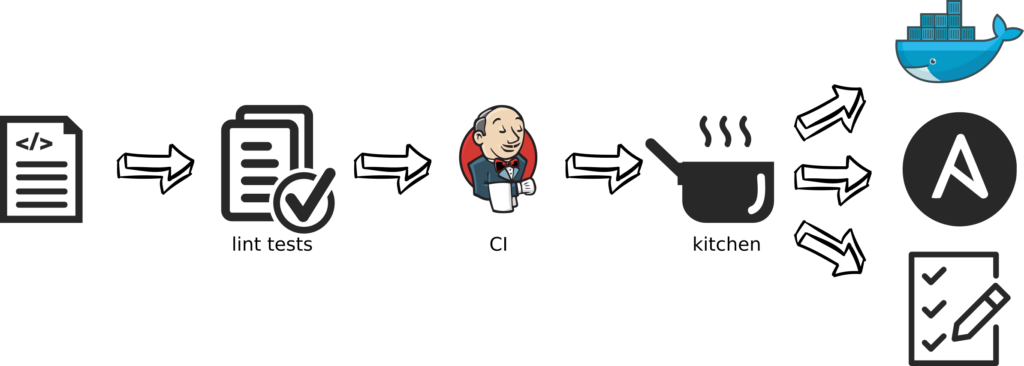

ansible-lint should be the first test done by your CI. I also recommend running it on the client side before pushing the code to the CI. A good idea is to add it to a git pre-commit hook (https://git-scm.com/book/en/v2/Customizing-Git-Git-Hooks).

Usage example :

In that example, we can see trailing whitespace reported at the end of line 15.
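To give an idea of the pre-commit hook mentioned above, here is a minimal sketch. It assumes ansible-lint is installed and on the PATH, and that your version accepts each staged YAML file as input. The function names yaml_only and lint_staged are mine, they are not part of git or ansible-lint.

```shell
#!/bin/sh
# Sketch of a pre-commit hook; function names are illustrative.

# Keep only YAML paths from a list of file names read on stdin.
yaml_only() {
    grep -E '\.ya?ml$' || true
}

# Run ansible-lint on every staged (added, copied or modified) YAML file.
# Returns non-zero as soon as one file fails the lint.
lint_staged() {
    git diff --cached --name-only --diff-filter=ACM | yaml_only |
    while IFS= read -r file; do
        ansible-lint "$file" || exit 1
    done
}

# Only lint when git actually runs this file as the pre-commit hook.
if [ "${0##*/}" = "pre-commit" ]; then
    lint_staged
fi
```

Saved as .git/hooks/pre-commit and made executable, this blocks the commit when ansible-lint exits with an error.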

Tests

Let's start by thinking about what we want to test and how to test it.

I see two main cases we want to test:

- Each new change in a playbook or role: validate that the new change doesn't break things.

- The resulting state: whether we run Ansible for a new setup or run it again on an existing platform, all components should be in the expected state afterwards. For example, if we set up nginx, after running Ansible we should find nginx running on the system and listening on port 80 (and a second run should report only ok statuses).

OK, so how do we validate these points?

Before working with Ansible, I worked with Puppet. Naturally, I started with the same tool we used for Puppet: Serverspec. Serverspec is one of the most popular tools used with Puppet to validate that everything went well after a Puppet run.

Although Serverspec is a good tool to check the status of several elements of your platform, I was not totally convinced it was the best solution.

We had some difficulty sharing custom variables or syncing default variables between Puppet manifests and Serverspec rules. This is why I looked for another way to do it with Ansible.

The other approach I found interesting was Ansible test strategies (http://docs.ansible.com/ansible/test_strategies.html). The idea is to test an Ansible role or playbook with Ansible itself: execute commands and actions to validate the result on the installed server or during the installation.

I like that you don't need to set up an external tool to validate a platform. It's also easy to access all the variables used to set up the platform and take them into account in the tests.

You can use Ansible test strategies inside a role or a playbook, for example in a check.yml or on tagged tasks. That allows you to run tests during the setup and roll back faster in case of an unexpected failure. It also provides a way to run only the check tests on a platform by playing with Ansible tags.
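As a rough sketch of that idea, the script below writes a small check.yml whose tasks all carry a check tag. The file path, the port and the tag name are illustrative assumptions, not taken from a real role; wait_for and command are standard Ansible modules.

```shell
#!/bin/sh
# Write a task file of post-install checks to the path given as $1.
# Every task is tagged "check" so it can be selected on its own.
write_check_tasks() {
    cat > "$1" <<'EOF'
- name: check that nginx listens on port 80
  wait_for:
    port: 80
    timeout: 10
  tags: check

- name: check that an nginx process is running
  command: pgrep nginx
  changed_when: false
  tags: check
EOF
}

# Usage (not executed here, paths are examples):
#   write_check_tasks roles/web/tasks/check.yml
#   ansible-playbook -i inventory site.yml --tags check
```

Once the file is included from the role, running the playbook with --tags check plays only these tasks, which is handy to re-validate an existing platform without replaying the whole setup.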

To sum up, you have two approaches to testing:

- Internal: validate the installed platform with the installation tool itself (Ansible in this case).

  - Pro: Easy to access installation variables and apply adapted tests. Easy to add conditional tests following a role's behavior.

  - Con: You need to trust the same tool. Implementing tests for a role that you can't modify requires you to organize your way of working.

- External: validate the installed platform with an external tool.

  - Pro: A totally independent way to validate a platform.

  - Con: Not always easy to maintain and share variables between several tools.

I think the internal way is the best to test a role. It's easier to know what is expected and to use the same conditions to test each use case.

For a playbook, using both internal and external tests could be a good idea. The internal way allows you to run intermediate tests and check that everything goes well during the playbook. It also helps to validate specific values that depend on Ansible variables, while external tests can help you validate that the whole expected result is correct. Another example: you want to replace role A in the playbook with role B because A is not maintained anymore. With external tests, simply replace the role and run the same tests to validate that the result of B == A.

Run tests

To test an Ansible role or playbook and validate every step, it's better to do it in a new and clean environment. We don't want leftovers between two tests.

The approach is to use a virtual environment to test the entire playbook and then reset it for the next test.

The type of virtual environment will depend heavily on the content of your playbook. In most cases, containers have the advantage of being lighter than full virtualization and will save you time and resources. In some cases you might need full virtualization, for example if your playbook uses devices (disk, …) or needs a kernel module.

Given my needs, I decided to use containers, and specifically Docker, to run my tests.

At this point there is one last thing to choose: how to provision my container(s) and run the tests.

My first idea was to use Ansible with the Docker module (http://docs.ansible.com/ansible/docker_module.html), but for this I needed to write some extra code to spawn containers, run my tests and generate a report. I didn't want to maintain this extra code.

In the end I decided to go for kitchen.ci (http://kitchen.ci/).

What is Test Kitchen?

Test Kitchen is a test harness tool to execute your configured code on one or more platforms in isolation. A driver plugin architecture is used which lets you run your code on various cloud providers and virtualization technologies such as Amazon EC2, Blue Box, CloudStack, Digital Ocean, Rackspace, OpenStack, Vagrant, Docker, LXC containers, and more. Many testing frameworks are already supported out of the box including Bats, shUnit2, RSpec, Serverspec, with others being created weekly.

For me the advantages are :

- Agnostic: Kitchen can deal with multiple backends (Docker, OpenStack, Vagrant).

- Handles dependency setup in a base image (Ansible, Serverspec, …)

- Simplifies dealing with several use case scenarios.

- Offer multiple steps (create, converge, verify)

- Provide an easy way to connect to containers
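The steps listed above map to kitchen subcommands (create, converge, verify, destroy). A minimal sketch of chaining them by hand could look like this. The KITCHEN_BIN variable is a hypothetical override I add for dry runs; it is not a kitchen option.

```shell
#!/bin/sh
# Run the kitchen stages in order and always destroy the instances at the end.
# KITCHEN_BIN is an illustrative override (e.g. KITCHEN_BIN=echo for a dry run).
run_kitchen_stages() {
    kitchen_bin=${KITCHEN_BIN:-kitchen}
    status=0
    for stage in create converge verify; do
        if ! "$kitchen_bin" "$stage"; then
            status=1
            break
        fi
    done
    # Clean up so the next run starts from a fresh environment.
    "$kitchen_bin" destroy
    return "$status"
}
```

In practice, kitchen test chains these stages (destroy included) in a single command; splitting them is mostly useful when you want to log in to a container between converge and verify.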

The perfect tool does not exist: kitchen.ci is mainly focused on running tests for Chef on Vagrant. So if you want to run a test on several containers for Ansible with Docker, you need to hack the default behavior a little.

Summary

A basic workflow implementation should at least validate the code and then test it in a clean environment.

In my example, I validate the code with ansible-lint and use a git hook on Jenkins to trigger the tests. I use kitchen.ci to run Ansible in Docker containers, test the setup with Ansible test strategies and then run post tests with Serverspec.

It's not the only solution, and you can add a lot more, but it's a good start.

For example, a task to check role idempotence: http://www.jeffgeerling.com/blog/testing-ansible-roles-travis-ci-github

Another important test is the idempotence test—does the role change anything if it runs a second time? It should not, since all tasks you perform via Ansible should be idempotent (ensuring a static/unchanging configuration on subsequent runs with the same settings).

    # Run the role/playbook again, checking to make sure it's idempotent.
    - >
      ansible-playbook -i tests/inventory tests/test.yml --connection=local --sudo
      | grep -q 'changed=0.*failed=0'
      && (echo 'Idempotence test: pass' && exit 0)
      || (echo 'Idempotence test: fail' && exit 1)
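One thing to keep in mind: with several hosts in the recap, the grep above passes as soon as a single host line matches. Here is a hedged sketch of a stricter check that requires every recap line to report changed=0 and failed=0. The function name is_idempotent is mine, and it assumes the usual PLAY RECAP line format printed by ansible-playbook.

```shell
#!/bin/sh
# Read ansible-playbook output on stdin.
# Succeed only if at least one recap line exists and every recap line
# reports changed=0 and failed=0.
is_idempotent() {
    awk '
        /changed=[0-9]+/ {
            hosts++
            line = $0 " "   # trailing space so a counter at end of line still matches
            if (line !~ /changed=0[^0-9]/ || line !~ /failed=0[^0-9]/) bad++
        }
        END { exit (hosts == 0 || bad > 0) }
    '
}

# Usage (second run of the same playbook, not executed here):
#   ansible-playbook -i tests/inventory tests/test.yml | is_idempotent \
#       && echo 'Idempotence test: pass' || echo 'Idempotence test: fail'
```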

Example

Now let's stop talking and have a look at two examples.

- The first one is a playbook to install a web frontend (nginx) and a backend (php-fpm). Do not use this playbook in production; it only shows you the interaction between containers.

- The second one is a role for installing Ansible on a host.

In your case you might not need this kind of configuration and can simply run the tests locally on the first container.

All kitchen documentation can be found here :

- http://kitchen.ci/docs/getting-started/

- https://github.com/portertech/kitchen-docker

- https://github.com/neillturner/kitchen-ansible

I installed it with :

Playbook

I will skip the obvious part of using ansible-lint in a git hook and Jenkins jobs to focus on the tricky part: kitchen.ci and Ansible tests.

The code of this playbook can be found here: https://github.com/gaelL/kitchen_ci/tree/master/playbook_test

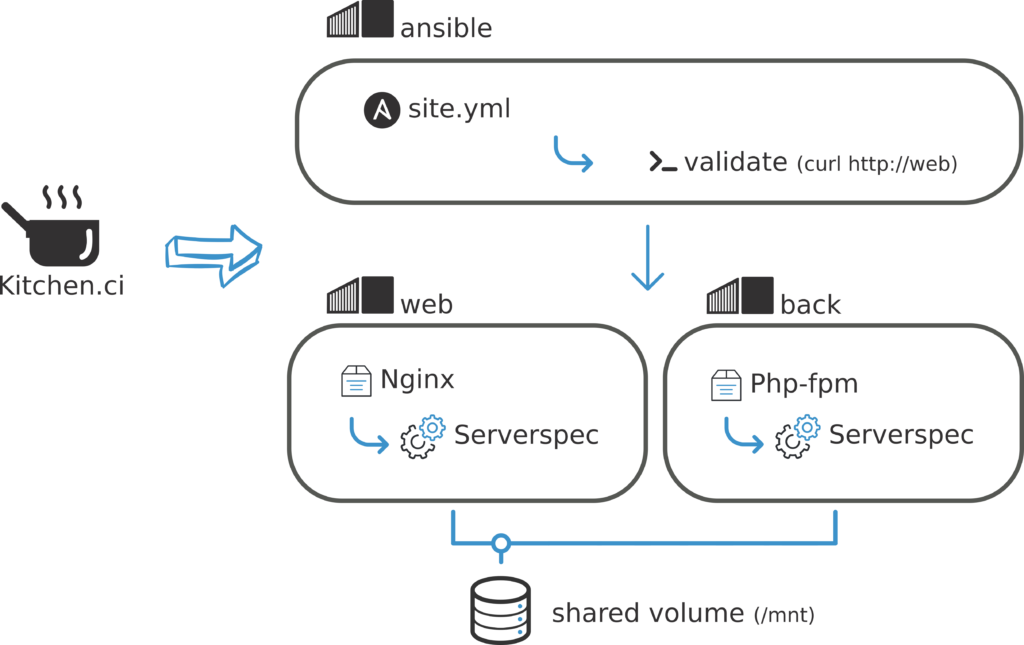

Kitchen.ci will handle Docker container creation. In this example we create 3 containers and one shared volume.

- ansible (bastion): container in charge of running the ansible command. I consider it an Ansible launcher only; I do not install things on it.

- web: container in which we set up the nginx web server that listens on port 80

- back: backend container with php-fpm installed on it

- shared volume: volume mounted in /mnt on back and web, containing the PHP code.

Look at this configuration : https://github.com/gaelL/kitchen_ci/blob/master/playbook_test/.kitchen.yml

I tried to comment all the tricky things inline. To sum up:

- Kitchen will create these 3 containers and run the ansible-playbook command with site.yml from the ansible container.

- At the end we have 2 containers installed by Ansible (web and back)

- The playbook uses Ansible testing strategies to validate the setup itself.

- The web and back containers use Serverspec to validate the containers after the playbook has run

- Optionally, I also used Bats (Bash Automated Testing System) on the ansible container to show that you can use tools other than Serverspec.

- The test uses a custom Ansible inventory file (kitchen-hosts)

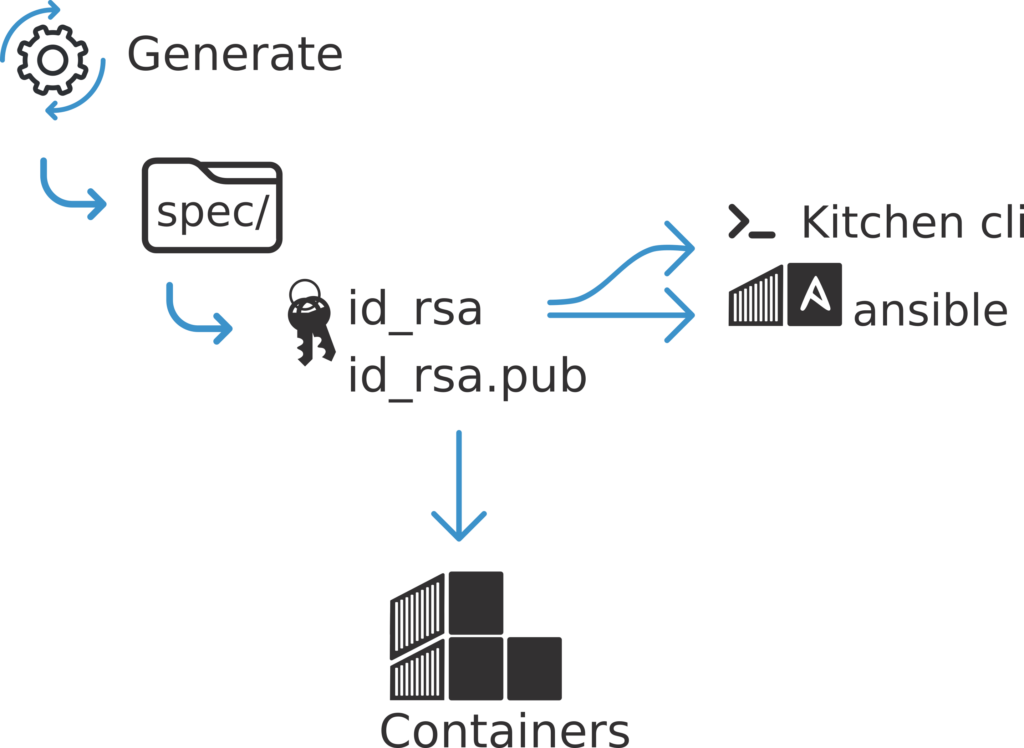

Before going further, I'm going to explain a key point in this configuration. As we saw, kitchen.ci is not written to spawn a platform of multiple containers for the same test, so I'm playing with “suites” to do this job. The biggest question is: how do we share an SSH key to run Ansible across all containers?

I tried a lot of solutions with pre_command, … and the cleanest way I found is to use the kitchen.ci SSH key generated for the kitchen CLI.

By default, when you call kitchen create, kitchen creates an SSH key pair in the .kitchen directory. This key pair is used, for example, during a kitchen login.

This tells us that kitchen generates a key pair, adds the public key to .ssh/authorized_keys in all containers and uses the private key with the kitchen CLI.

Reading the kitchen-ansible documentation, we have a private_key option to specify a local private key to run Ansible inside the container.

How do we use the private key from the CLI inside our container?

As far as I know, during the converge step kitchen copies some directories into the container, such as the spec directory. The simple way is to tell the kitchen CLI to generate the SSH key pair in the spec directory. This directory is copied into the ansible container at converge, and the private key can then be used to run Ansible.

Which gives us this configuration:

Post tests: Don't hesitate to have a look at the post tests run after Ansible (Serverspec and Bats), for example on the web container to check that nginx is running and listening on port 80.
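To give a rough idea of what such a post test checks, here is a plain shell sketch. It is not the Serverspec or Bats file from the repository. It assumes the ss tool is available in the container and that its ss -ltn output keeps the local address:port in the fourth column; the function name listens_on is mine.

```shell
#!/bin/sh
# Read `ss -ltn` style output on stdin and succeed if a socket
# listens on the port given as $1 (the header line is skipped).
listens_on() {
    awk -v port="$1" '
        NR > 1 {
            n = split($4, parts, ":")      # "0.0.0.0:80" or "[::]:80"
            if (parts[n] == port) found = 1
        }
        END { exit !found }
    '
}

# Usage inside the web container (not executed here):
#   ss -ltn | listens_on 80 && echo 'nginx port is open'
```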

Now run it and let the magic begin:

Role

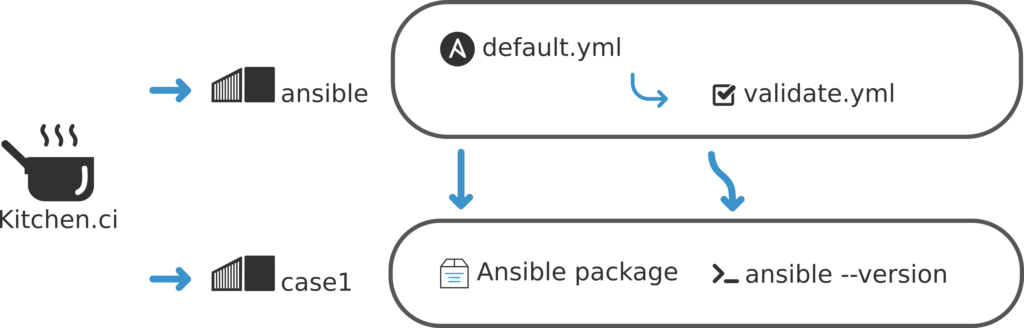

Running kitchen for a role is pretty close to running it for a playbook. I will be brief and only explain the differences.

https://github.com/gaelL/kitchen_ci/tree/master/role_test

The goal of the role is to set up Ansible on a remote host. I used the same solution as for the playbook: I create an ansible container to run Ansible, and a second container (case1) on which I want to install Ansible.

Note: This role already uses an Ansible testing strategy to run the ansible --version command on the targeted container.

Kitchen configuration here :

https://github.com/gaelL/kitchen_ci/blob/master/role_test/.kitchen.yml

As you probably noticed, I don't use an Ansible inventory file and no playbook is specified.

- Ansible inventory: I decided not to write a fixed inventory for the test and to build it dynamically in my playbook with the Ansible add_host module

- Playbook: I used the native kitchen-ansible behavior and created a dedicated playbook to test my role inside the test directory.

The test playbook is here : https://github.com/gaelL/kitchen_ci/blob/master/role_test/test/integration/ansible/default.yml

Note that the role name in the playbook refers to the directory path specified in the .kitchen.yml config file: roles_path: ../role_test. The default behavior is to copy the current directory inside the Ansible roles directory.

Nothing much more to say; it's pretty close to the playbook test. The nice thing about role tests is that all your test files can be defined inside the test directory.

Run it

Tips

One: CI tests should run quickly. To speed them up, don't hesitate to pre-build an image for the bastion container with Ansible and its dependencies pre-installed.

How I created mine :

Two: On my CI, I have more than enough memory but poor hard drive performance. I don't need the data in my containers to persist. Changing the Docker storage configuration to use a tmpfs really improved the test time. (Be careful with that.)

Three: How to have multiple test cases for the same role. We saw that I use an ansible and a case1 container. Docker can't have 2 containers with the same name, so think about that when you configure kitchen. A prefix could be a good idea, e.g. <role_name><case_number><container_name>.

Four: For troubleshooting purposes, it's possible to run Ansible manually after a converge

Five: Ansible has a small native syntax check. It could be the first step of your CI pipeline
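The native check is the --syntax-check flag of ansible-playbook. A minimal sketch of a first pipeline step could be the function below; the function name and the PLAYBOOK_BIN override are hypothetical helpers I add for dry runs, not Ansible settings.

```shell
#!/bin/sh
# Syntax-check every playbook passed as an argument and stop at the first failure.
# PLAYBOOK_BIN is an illustrative override (e.g. PLAYBOOK_BIN=echo for a dry run).
syntax_check() {
    playbook_bin=${PLAYBOOK_BIN:-ansible-playbook}
    for playbook in "$@"; do
        "$playbook_bin" --syntax-check "$playbook" || return 1
    done
}

# Usage (not executed here, file names are examples):
#   syntax_check site.yml tests/test.yml
```

It only parses the playbooks and runs no task, so it is cheap enough to run before any container is created.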

Six: Know all the default variables of your kitchen.ci configuration file. The kitchen diagnose command displays your YAML configuration with all the variables kitchen.ci uses.

Thanks for reading !
